Inevitability Weekly # 2

AI, Energy (for AI) and Corporate Earnings (AI Capex)

When I started this idea last week, I wondered, “what will I do if there’s not enough engaging material one week?” That was certainly not the issue this week. In fact, it was the opposite: this week was full of interesting developments in AI, energy and corporate earnings.

AI Bubble Talk

It’s tough to read anything finance or tech related these days without some discussion of whether AI is a bubble. With stock markets at all-time highs and capital expenditures from big tech companies continuing to increase at an unprecedented rate, bubble talk dominates every corner of finance and tech media. As with any debate, it’s helpful to understand both sides, to learn from people with skin in the game and to get perspectives from people who are on the ground with a front row seat, before eventually coming to our own conclusions, from which we must make investment decisions.

AI Capex Boom

This piece from Sparkline Capital does a great job substantiating the big tech companies’ transition from asset-light businesses to businesses that spend a much higher % of revenue on capex. They cite a McKinsey estimate that AI spending will reach a cumulative $5.2T over the next 5 years and a Bain estimate that data centers will need to generate $2T in annual revenue by 2030 for companies to see an adequate return on investment.

The Magnificent 7’s exceptional success has been fueled by their asset-light business models. By leveraging intangible assets, such as intellectual property, brand equity, human capital, and network effects, these firms have generated outsized returns without requiring a lot of capital.

As the top panel of the next exhibit shows, the Magnificent 7 have enjoyed enviable returns on invested capital of 22.5%, compared to just 6.2% for the S&P 493 over the past decade. Their higher capital efficiency is explained by their heavier use of intangible assets. As the bottom panel shows, their intangible intensity has been 3-7 times that of the S&P 493.

The piece addresses other concerns such as how concentrated the stock market has become and how AI infrastructure spending may be propping up the economy, as well as drawing comparisons to past booms such as railroads and the internet.

The future and near-term return on investment from all of this AI spending is top of mind for many investors, but the piece also highlights how big tech management seems to be viewing the AI arms race: less about immediate ROIC and more about who will emerge as leaders of a future where AI is the most important technology in the world.

“If we end up misspending a couple of hundred billion dollars, I think that that is going to be very unfortunate, obviously. But what I’d say is I actually think the risk is higher on the other side.”

- Mark Zuckerberg, Meta CEO

“I’m willing to go bankrupt rather than lose this race.”

- Larry Page, Google co-founder

The Magnificent 7 have a well-deserved reputation as high-quality, ensconced in an oligopoly across key tech markets.

However, AI appears poised to disrupt this cozy oligopoly, at least in the minds of Big Tech CEOs. As Bill Gates himself has argued, AI collapses their respective markets – search, social media, shopping – into one, and whoever wins the AI race wins all markets. Viewing AI as an existential risk, they have been forced into a bitterly competitive and costly arms race.

The AI arms race resembles the classic “Prisoner’s Dilemma” game theory problem. While the optimal move is for firms to mutually agree to moderate their AI investments, preserving their oligopoly, this equilibrium is unstable, as each firm is incentivized to unilaterally ramp up investment to capture the market; if OpenAI goes all-in, the rest cannot afford to sit idly. This results in a suboptimal equilibrium, in which all firms invest aggressively, even if it leads to over investment and the destruction of the collective profit pool.
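To make the game theory concrete, here is a minimal Python sketch of a two-firm version of this game. The payoff numbers are hypothetical and chosen by me purely to have the prisoner's dilemma ordering; they are not from the Sparkline piece, and the move names `moderate` and `all_in` are just illustrative labels.

```python
from itertools import product

# Hypothetical payoffs (arbitrary profit units), assumed only for illustration.
# Keys are (firm_a_move, firm_b_move); values are (firm_a_payoff, firm_b_payoff).
MOVES = ("moderate", "all_in")
PAYOFFS = {
    ("moderate", "moderate"): (3, 3),  # both hold back, oligopoly preserved
    ("moderate", "all_in"): (0, 5),    # A holds back, B captures the market
    ("all_in", "moderate"): (5, 0),    # A captures the market
    ("all_in", "all_in"): (1, 1),      # both overinvest, profit pool shrinks
}


def best_response(player, other_move):
    """Return the move that maximizes `player`'s payoff given the rival's move."""
    def payoff(move):
        profile = (move, other_move) if player == 0 else (other_move, move)
        return PAYOFFS[profile][player]
    return max(MOVES, key=payoff)


def nash_equilibria():
    """Return profiles where each firm's move is a best response to the other's."""
    return [
        (a, b)
        for a, b in product(MOVES, MOVES)
        if best_response(0, b) == a and best_response(1, a) == b
    ]


equilibria = nash_equilibria()
print(equilibria)  # [('all_in', 'all_in')]
```

With these assumed payoffs, going all-in is the best response no matter what the rival does, so the only equilibrium is the one where both firms invest aggressively, even though both would be better off if they both moderated.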

Return on capital is on everyone’s mind, but I think the above is probably the right way to understand the level and rate of spending that we’re seeing. This is the defining technological arms race of our lifetime, and as long as the promise of AGI exists, big tech companies will spare no expense in their pursuit of victory.

This was a sober and thoughtful read and definitely helpful for investors to understand the reality of big tech companies spending more on capex and what that might mean for future returns.

Gavin Baker Interview

For an argument on why AI is not a bubble, Gavin Baker, founder of Atreides Management, gave an illuminating interview with David George at a16z’s Runtime conference. I always enjoy learning from listening to Gavin and admire how he is able to balance his optimism for technology with a sober grounding in financial reality.

He addresses the comparisons to the internet bubble by looking at both valuations and utilization of GPUs compared to the amount of unused fiber that was built during the telecom bubble.

I do not believe we’re in an AI bubble today. I had, depending on how you look at it, the privilege and the misfortune of being a tech investor during the year 2000 bubble, which was really a telecom bubble. And I think it’s helpful to compare and contrast today to the year 2000. Cisco peaked at 150 or 180 times trailing earnings, NVIDIA is at more like 40 times. So valuations are very different. More importantly, however, is that the internet bubble or telecom bubble in 2000 was defined by something called dark fiber.

If you were around in 2000, you’ll know what that was. Dark fiber was literally fiber that was laid down in the ground, but not lit up. Fiber is useless unless you have the optics and switches and routers that you need on either side. So I vividly remember companies like Level Three or Global Crossing or WorldCom would come in and they say, “We laid 200,000 miles of dark fiber this quarter. This is so amazing. The internet’s gonna be so big. We can’t wait to light these up.”

At the peak of the bubble, 97% of the fiber that had been laid in America was dark. Contrast that with today, there are no dark GPUs. All you have to do is read any technical paper, and understand that the biggest problem in a training run is that GPUs are melting from overuse.

He also points to the increase in ROIC the big tech companies are seeing as evidence that we’re not currently in a bubble.

And there’s a simple way to kind of cut to the heart of all of this.

It is to look at the Return on Invested Capital of the biggest spenders on GPUs who are all public. And those companies, since they ramped up CapEx, have seen around a 10 point increase in their ROICs. So, thus far, the ROI on all the spending has been really positive.

There’s an interesting and open debate about whether or not it will continue to be positive, with how much spend we’re going to have on Blackwell. (I personally think it will.) But there’s no debate that thus far, the ROI on AI spend has been really positive. And valuation wise, we’re just not in a bubble.

The interview is substantial and touches on topics ranging from market structure and business models to the infrastructure vs application layer, humanoid robots and more. One part that is particularly interesting is the statement that Google, not other chip or networking companies, is perhaps the biggest potential competitor to Nvidia.

So Nvidia’s biggest competitor isn’t AMD, it’s not Broadcom. It’s certainly not Marvell. It’s not Intel. It’s Google.

And more specifically, it’s Google because Google owns the TPU chip. And - today at least - it’s the only alternative to Nvidia for training. And maybe the best inference alternative.

And Google’s a problematic competitor. Because they also own a company called DeepMind and they have a product called Gemini. And I think you could argue that they’re the leading AI company today. I think they’ve taken 15 or 20 points of traffic share in the last two or three months. That’s just traffic to Gemini; it does not include search overviews. I suspect on an actual traffic basis, Google is bigger than OpenAI, Anthropic, anyone today. And that business is gonna run on TPUs.

And then we have three other labs that are relevant today. There’s Anthropic; that’s an Amazon and Google captive. Anthropic is really going to run on TPUs and Trainiums. And so you’re left with xAI and OpenAI at the forefront. And if Google is going to a lab like Anthropic and saying, “I’m going to help you fundraise and give you chips,” for competitive reasons, it’s very hard for Nvidia not to respond. And as Jensen said, he thinks it’s going to be a good investment.

He continues his discussion of Google’s positioning later on in the interview:

I think it is really a fight between Nvidia and the Google TPU. And then something that I don’t think is broadly appreciated is the extent to which Broadcom and AMD are effectively going to market together.

Nvidia is no longer just a semiconductor company, as I’m sure you’ll hear from Jensen tomorrow. It was a semiconductor company, then a software company with CUDA, now a systems company with these rack level solutions. And now arguably, a data center-level company with the level of architecting they’re doing with scale up, scale across and scale out networking.

So the networking, the fabric, the software, it’s all important. And what Broadcom is saying to companies like Meta is, “Hey, we will build you a fabric that can theoretically compete with Nvidia’s fabric, which is a mixture of NVLink and either Infiniband or Ethernet. We’ll build it on Ethernet, it’s gonna be an open standard. And we’ll make you your version of TPU, which took Google three generations to get working. And you know what, if your ASIC isn’t good, you can just plug AMD right in.”

I personally believe most of those ASICs are gonna fail, particularly in the fullness of time. I think you’ll see a bunch of high profile ASIC programs canceled, especially if Google starts selling TPUs externally.

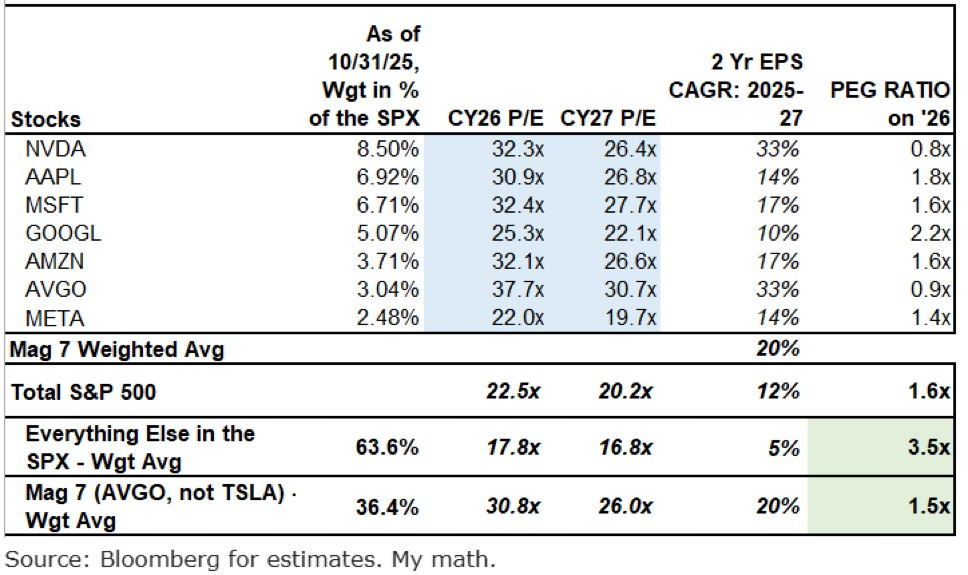

What can we make of all this? It does seem inevitable that the big tech companies will continue to ramp capex in the near term, as the AI arms race shows no sign of slowing down. It also seems inevitable that the leaders of these companies view the risk of losing the arms race as far worse than the risk of overspending. We think this is the proper lens through which to view what’s going on. As for the bubble talk, there are pockets of the market that look worse than the Mag 7. This chart from BAML shows valuations and projected growth for the Mag 7 (with Broadcom instead of Tesla) over the next two years. Yes, they are collectively more expensive than the other 493 companies on an absolute basis, but they are also poised to grow earnings 20% vs an average of 5% over the next two years.

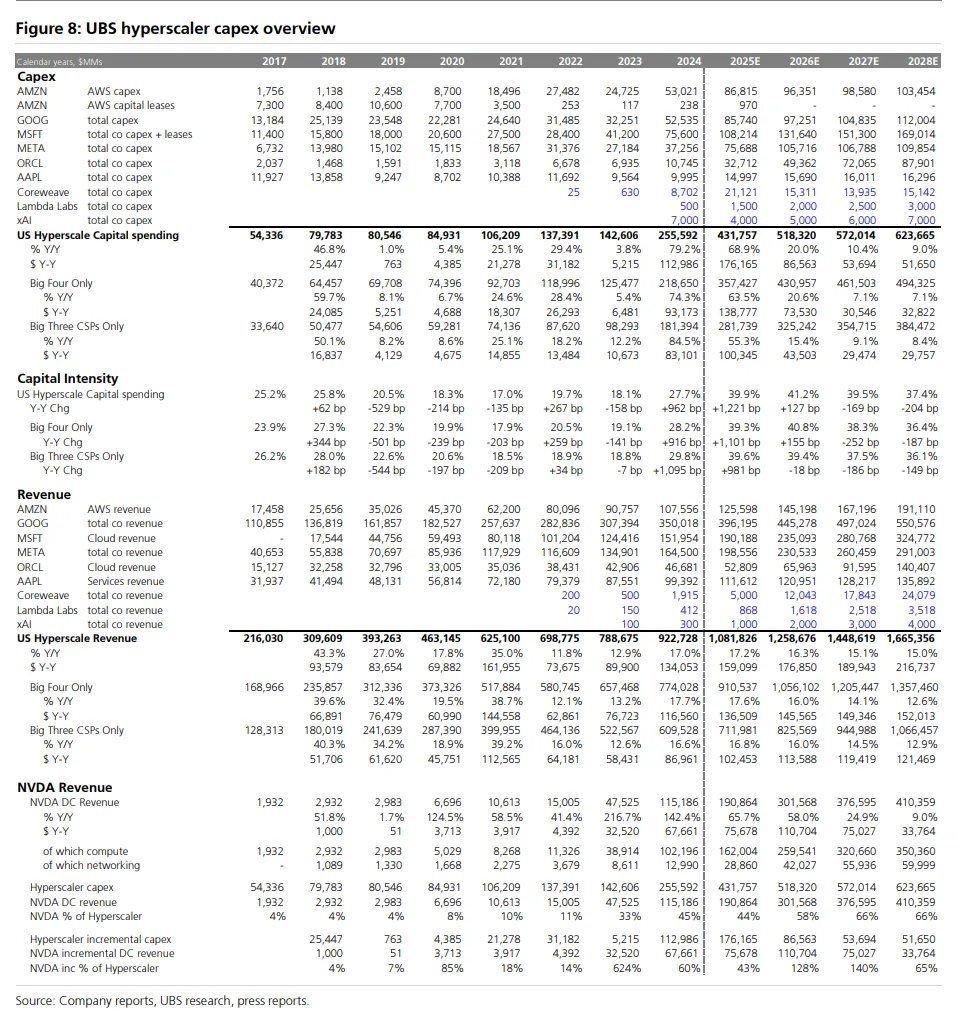

This chart from UBS projects capex for the hyperscalers through 2028 and sees Amazon, Google, Microsoft and Meta each spending over $100B on capex in 2028. The projections also predict over $400B of 2028 revenue for Nvidia, the majority of which will come from compute, with the rest coming from networking.

Satya Nadella said on the BG2 podcast that the biggest bottleneck for AI isn’t compute, but power and the ability to build data centers quickly, near power. This is an active area of research for us as it seems that AI progress will be inevitably constrained by access to power. It fits well within our framework of identifying bottlenecks and thresholds and this week there were several major deals that highlight how central energy is becoming to AI.

Energy Deals

The pursuit of energy solutions to power the AI arms race is top of mind for hyperscalers.

This week saw Google and NextEra teaming up to restart a nuclear power plant:

NextEra Energy and Google have reached an agreement to restart an Iowa nuclear power plant shut five years ago, the companies said on Monday, in another sign that data-center power demand is renewing interest in U.S. nuclear energy.

The technology industry’s quest for massive amounts of electricity for artificial-intelligence processing has renewed interest in the country’s nuclear reactors, which generate large amounts of around-the-clock power that is virtually carbon free.

It also saw a landmark deal announced between Brookfield, Cameco, Westinghouse and the United States Government to build $80B worth of nuclear power plants across the country.

At the center of the new strategic partnership, at least $80 billion of new reactors will be constructed across the United States using Westinghouse nuclear reactor technology. These new reactors will reinvigorate the nuclear power industrial base.

As a result of this historic agreement, nuclear energy deployment will be a central pillar of America’s program to maintain global leadership in nuclear power development and Artificial Intelligence.

The partnership will facilitate the growth and future of the American nuclear power industry and the supporting supply chain. Each two-unit Westinghouse AP1000 project creates or sustains 45,000 manufacturing and engineering jobs in 43 states, and a national deployment will create more than 100,000 construction jobs. The program will cement the United States as one of the world’s nuclear energy powerhouses and increase exports of Westinghouse’s nuclear power generation technology globally.

Once constructed, the reactors will generate reliable and secure power, including for significant data center and compute capacity that will drive America’s growth in AI.

The partnership contains profit sharing mechanisms that provide for all parties, including the American people, once certain thresholds are met, to participate in the long-term financial and strategic value that will be created within Westinghouse by the growth of nuclear energy and advancement of investment into AI capabilities in the United States.

The construction of the Westinghouse nuclear power plants was also included in the announcement that Japan will invest $550B in the United States.

Energy-related projects appeared to loom large in the list in terms of total scale of business. Westinghouse’s construction of AP1000 nuclear reactors and small modular reactors was expected to be worth up to $100 billion, involving Japanese suppliers and operators including Mitsubishi Heavy Industries. Another small modular reactors project that could involve GE Vernova / Hitachi was framed as also being worth up to $100 billion.

“These are great companies, many of them household names — that you would expect — that are going to build infrastructure and improve the national economic security of the United States,” Lutnick said, adding that other projects can be considered.

“We look forward to engaging with other companies that wish to provide that same sort of foundational position. We’re going to be examining shipbuilding, pipelines, there’ll be an enormous number of other critical minerals. There are a whole variety.”

If the energy bottleneck for AI ends up reenergizing investment in nuclear power, that’s a win-win for the United States and the companies involved. Nuclear has long promised an abundance of clean energy but has been held back for reasons beyond the scope of this piece. This remains an active area of research for us, as energy is one of the defining bottlenecks in the ongoing AI arms race. It’s exciting to see so much focus on scaling the world of atoms to power the world of bits.

Corporate Earnings

Google

With talk of a bubble so prominent in the media, there is an increased focus on the earnings of the big tech companies. In our 2023 piece about the future of networking, we noted how Google’s custom TPUs, purpose-built networking and overall data center architecture put them in a unique position as both a cloud service provider and an AI model and application developer. Google has been under a lot of pressure lately because they were seen as being behind in the AI race, despite the transformer having been invented by Google researchers. We believe that Alphabet is one of the best companies of all time, and this quarter was great evidence that they are well positioned to capture value in the ever-changing landscape of high technology.

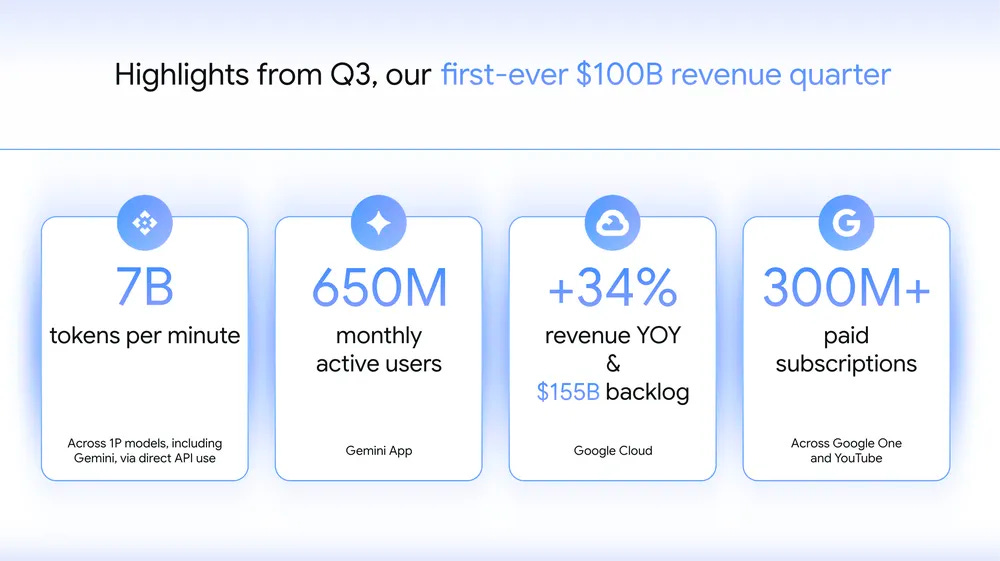

Some highlights from their first-ever $100B quarter (wild to say):

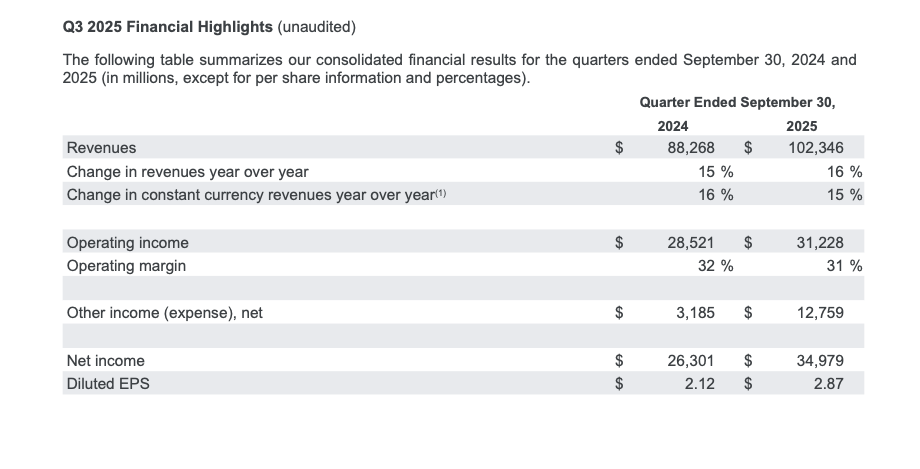

Consolidated Alphabet revenues in Q3 2025 increased 16%, or 15% in constant currency, year over year to $102.3 billion. Google Search & other, YouTube ads, Google subscriptions, platforms, and devices, and Google Cloud each delivered double-digit growth in Q3.

Google Services revenues increased 14% to $87.1 billion, reflecting robust performance across Google Search & other, Google subscriptions, platforms, and devices, and YouTube ads.

Google Cloud revenues increased 34% to $15.2 billion, led by growth in Google Cloud Platform (GCP) across core products, AI Infrastructure and Generative AI Solutions.

Total operating income increased 9% and operating margin was 30.5%. Excluding the $3.5 billion charge related to the European Commission (EC) fine, operating income increased 22% and operating margin was 33.9%, benefitting from strong revenue growth and continued efficiencies in the expense base.

Other income reflected a net gain of $12.8 billion, primarily the result of net unrealized gains on our non-marketable equity securities.

Net income increased 33% and EPS increased 35% to $2.87.

With the growth across our business and demand from Cloud customers, we now expect 2025 capital expenditures to be in a range of $91 billion to $93 billion.

So yes, the capex numbers are eye-popping. But one could argue that $100B quarters with 30% operating margins let a company afford such bets. The blog post is full of exciting developments in all aspects of their business, including this note about TPU demand:

“Our highly sought-after TPU portfolio is led by our seventh-generation TPU, Ironwood, which will be generally available soon. We’re investing in TPU capacity to meet the tremendous demand we’re seeing from customers and partners, and we’re excited that Anthropic recently shared plans to access up to 1 million TPUs.”

Overall, this quarter was about everything one would want to see from the company as an investor: double-digit growth across every part of the business, strong AI usage and a high pace of shipping, and cloud growth with solid margins. We believe that Alphabet remains uniquely positioned to capture value in the AI arms race as both an infrastructure provider and a model and application developer, on top of being an exceptional and reasonably diversified technology company.
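As a quick sanity check on the Alphabet operating margin figures quoted earlier in this section, here is a small Python sketch that reconciles the reported margin with the margin excluding the EC fine. It only uses the rounded numbers from the release highlights, so the implied operating income is approximate, and the variable names are my own.

```python
# Figures taken from the Alphabet Q3 2025 highlights quoted in this section.
revenue_bn = 102.3        # consolidated revenue, $B
operating_margin = 0.305  # reported operating margin
ec_fine_bn = 3.5          # European Commission charge, $B

# Operating income implied by revenue and margin. The inputs are rounded,
# so this is an approximation rather than the exact reported figure.
operating_income_bn = revenue_bn * operating_margin

# Add the one-off charge back to get the adjusted figures.
adjusted_income_bn = operating_income_bn + ec_fine_bn
adjusted_margin = adjusted_income_bn / revenue_bn

print(f"Implied operating income: ${operating_income_bn:.1f}B")   # $31.2B
print(f"Adjusted operating income: ${adjusted_income_bn:.1f}B")   # $34.7B
print(f"Adjusted operating margin: {adjusted_margin:.1%}")        # 33.9%
```

The adjusted margin lands on the 33.9% stated in the release, so the fine alone accounts for the gap between the two margin figures.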

Amazon

Amazon also delivered a strong quarter, with strong cloud growth indicating healthy, continuing demand for AI infrastructure. They reported $180B of Q3 sales (a 13% increase) and $21.2B of net income, compared to $15.3B in Q3 2024. This sent shares up 11% after the report.

“We continue to see strong momentum and growth across Amazon as AI drives meaningful improvements in every corner of our business,” said Andy Jassy, President and CEO, Amazon. “AWS is growing at a pace we haven’t seen since 2022, re-accelerating to 20.2% YoY. We continue to see strong demand in AI and core infrastructure, and we’ve been focused on accelerating capacity – adding more than 3.8 gigawatts in the past 12 months. In Stores, we continue to realize the benefits of innovating in our fulfillment network, and we’re on track to deliver to Prime members at the fastest speeds ever again this year, expand same-day delivery of perishable groceries to over 2,300 communities by end of year, and double the number of rural communities with access to Amazon’s Same-Day and Next-Day Delivery.”

Some other highlights:

Saw continued strong adoption of Trainium2, its custom AI chip, which is fully subscribed and a multi-billion-dollar business that grew 150% quarter over quarter.

Launched Project Rainier, a massive AI compute cluster containing nearly 500,000 Trainium2 chips, to build and deploy Anthropic’s leading Claude AI models.

Announced new Amazon EC2 P6e-GB200 UltraServers using NVIDIA Grace Blackwell Superchips, designed for training and deploying the largest, most sophisticated AI models.

AWS grew 20%, with revenue of $33B and operating income of $11.4B, which was about 2/3 of the company’s total operating profits.

Amazon’s ability to execute at their scale is incredible, and while they have been under considerable pressure from investors about their ability to win AI deals, their Trainium chips and plans for $125B in capex put them in a solid position following these earnings.

The focus on big tech earnings is understandable considering how much of the index they represent (Microsoft, Meta, Amazon, Alphabet and Apple represent about 25% of the S&P 500 and all reported last week).

Meta & Microsoft

Meta’s earnings were skewed by a one-time tax hit, but the stock fell over 9% on higher 2025 capex guidance ($70-$72B) and a 300bps decrease in operating margin (43% to 40%). That came despite $51.2B in Q3 revenue, a 26% increase from last year, which underscores strength in the core business. A cursory read of the move is that the market questions whether Meta can earn as satisfactory a return on its capex as the cloud service providers can.

Microsoft posted strong results, with revenue of $77.7 billion (up 18% year-over-year) and EPS of $3.72, both ahead of expectations. Growth was led by the Microsoft Cloud segment, which reached $44.4 billion in revenue, up 29%, with Azure and other cloud services growing about 40%. Despite the beat, the stock slipped roughly 3% as investors focused on the company’s steep $34.9 billion in quarterly capex and management’s signal that spending will accelerate further in FY2026. The results reinforced Microsoft’s leadership in AI infrastructure, but also highlighted the market’s growing demand for evidence of durable margins and returns on this wave of investment.

Roundup

Some other interesting pieces from this week:

Bain highlighted a case of a private equity firm that was going to buy a software company. During diligence, they used AI to build a prototype similar to the company’s product and thought it was better, so they didn’t buy the company.

Anthropic launched Claude for Excel.

Substrate launched with the ambitious plan of not just creating a new lithography tool, but building their own fab in the US. SemiAnalysis, one of my favorite publications, wrote an excellent piece covering the company.

The Fed cut interest rates by 25bps, bringing the fed funds rate to 3.75-4%, but Powell stated that future rate cuts are “far from a foregone conclusion.” Notably, one committee member dissented in favor of a 50bps cut and another in favor of no cut.

1X launched NEO, a home robot you can buy for $20k.

And Elon said SpaceX will be building data centers in space.

This week was busy with discussions of the state of the market and whether AI is a bubble, landmark energy deals, corporate earnings, a Fed decision and some exciting startup launches, among many others. This format is new for me and I imagine it will continue to evolve. I’ll do my best to continue surfacing interesting things I come across in the process of my research and just as a result of being terminally online. As always, my DMs are open for interesting things along these lines!
